Features

Learn about the features that will help you get your app done.

All-in-one stack

DoneJS offers everything you need to build a modern web app. It comes with a module loader, build system, MVVM utilities, full testing layer, documentation generator, server-side rendering utilities, a data layer, and more. Its completeness is itself a feature.

There's no mixing and matching pieces of your stack. Just npm install and get started.

Choosing a modern stack is not at all simple or straightforward.

What types of tools do you want? Server-side rendering? What is a virtual DOM? Do I need one? MVVM or Flux? Should I set up testing infrastructure? Documentation?

Then you choose all your pieces. The good news is, you have many choices. The bad news is, you have many choices. React, Angular, or Vue? Webpack, Rollup, or Parcel? Mocha or QUnit? What tool will run my tests?

Finally, you have to make sure your chosen tools work together effectively. Does Parcel work well with Angular? Does Karma work with Browserify? What about React and Babel?

DoneJS gives you a full solution. It's our mission to eliminate any ambiguity around choosing technology for building an app, so you spend less time tinkering with your stack, and more time actually building your app.

And as we've proven over the last 8 years, we'll keep updating the stack as the state of the art evolves over time.

Integrated layers

Just like Apple integrates the hardware and software for its devices, DoneJS integrates different technologies in a way that creates unique advantages that you can only get from using an integrated solution.

Cross-layer features

By spanning technology layers, DoneJS makes it easy to do things that are impossible, or at best DIY, with competing frameworks. Here are a couple of examples:

1. Server-side rendering

Server-side rendering (SSR), which you can read about in more detail in its section below, spans many layers to make setup and integration simple.

It uses zones to automatically tell the server to delay rendering. Hot module swapping integrates automatically, so there's no need to restart the server while developing. Data is cached on the server, pushed to the client automatically (with HTTP/2 server push, or Preload links for HTTP/1.1), and used there to prevent duplicate AJAX requests. Support for these features is only possible because of code that spans layers, including can-zone, done-ssr, CanJS, and StealJS.

By contrast, React supports SSR, but you're left to your own devices to support delaying rendering, hot module swapping, and request reuse.

2. Progressive enhancement

Parts of your application can be progressively loaded using steal.import:

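```javascript
import { DefineMap } from "can";

export default DefineMap.extend("AppViewModel", {
  // The name of the current page, e.g. set by routing
  page: "string",

  // A getter can't use `await`, so return the promise from steal.import
  // (steal is the StealJS global). The page's bundle is only downloaded
  // when this property is read.
  get pageComponent() {
    const moduleName = `~/pages/${this.page}/`;
    return steal.import(moduleName).then(({ default: Component }) => {
      return new Component();
    });
  }
});
```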

and then running donejs build.

DoneJS' generators set things up so that anything within ~/pages is split into its own bundle. This feature spans StealJS, steal-tools, and donejs-cli.

Story-level solutions

Another advantage of the integration between DoneJS' parts is the ability to solve development problems on the level of stories rather than just features.

Solving a story means a packaged solution to a development problem, where several features across layers converge to solve the problem from start to finish. Here are several examples of stories that DoneJS solves:

Modular workflow - DoneJS makes it possible for teams to design and share components easily. Starting with generators, users can create modlets that encapsulate everything a custom element needs, add documentation and tests, then use npm import and export to share the modules with other developers, no matter what module format they're using.

Performance - DoneJS was designed from the start to solve the performance story, packaging server-side rendering, progressive loading, worker thread rendering, data layer caching, and more, all under one roof.

Developer efficiency - zero-config npm imports, hot module swapping, ES6 support

Feature comparison

[Table: features compared across frameworks, each rated Easy, Good, Difficult, Third-party, or No]

Performance Features

DoneJS is configured for maximum performance right out of the box.

Server-Side Rendered

DoneJS applications are written as Single Page Applications, and can be rendered on the server by running the same code. This is known as Isomorphic JavaScript, or Universal JavaScript.

Server-side rendering (SSR) provides two large benefits over traditional single page apps: much better page load performance and SEO support.

SSR apps return fully rendered HTML. Traditional single page apps return a page with a spinner. The benefit to your users is a noticeable difference in perceived page load performance.

Compared to other server-side rendering systems, which require additional code and infrastructure to work correctly, DoneJS is uniquely designed to make turning on SSR quick and easy, and the server it runs is lightweight and fast.

SEO

Search engines can't easily index SPAs. Server-side rendering fixes that problem entirely. Even if Google can understand some JavaScript now, many other search engines cannot.

Search engines see the HTML that your server returns. If you want search engines to find your pages, you'll want Google and other search engines to see fully rendered content, not the spinners that traditional SPAs show on initial load.

How it works

DoneJS implements SSR with a single-context virtual DOM utilizing zones.

Single context means every request to the server reuses the same context, including memory, modules, and even the same instance of the application.

Virtual DOM means a virtual representation of the DOM: the fundamental browser APIs that manipulate the DOM, but stubbed out.

A zone is used to isolate the asynchronous activity of one request. Asynchronous activity, like API requests, is tracked, and DoneJS' SSR waits for all of it to complete, ensuring that the page is fully rendered before HTML is sent to the user.

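When using DoneJS SSR, the same app that runs on the client is loaded in Node. When a request comes in, the server reuses that already-loaded app instead of starting a new one, renders it into the virtual DOM, waits until the request's zone reports that its asynchronous activity is finished, and then sends the resulting HTML back to the browser.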

Since SSR produces fully rendered HTML, it's possible to insert a caching layer, or use a service like Akamai, to serve most requests. Traditional SPAs don't have this option.

Rather than a virtual DOM, some other SSR systems use a headless browser on the server, like PhantomJS, which uses a real DOM. These systems are much slower and require much more intensive server resources.

Some systems, even if they do use a virtual DOM, require a new browser instance entirely, or at the very least, reloading the application and its memory for each incoming request, which also is slower and more resource intensive than DoneJS SSR.

Prepping your app for SSR

Any app that is rendered on the server needs a way to notify the server that any pending asynchronous data requests are finished, and the app can be rendered.

React and other frameworks that support SSR don't provide much in the way of solving this problem. You're left to your own devices to check when all asynchronous data requests are done, and delay rendering.

In a DoneJS application, asynchronous data requests are tracked automatically. Using can-zone, DoneJS keeps a count of requests that are made and waits for all of them to complete.
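As a rough illustration of the counting idea, here is a minimal sketch that assumes every asynchronous task is wrapped by hand. `createRequestTracker`, `track`, and `whenIdle` are hypothetical names, not can-zone's API; a real zone intercepts timers, XHR, and other async APIs itself, so application code doesn't have to wrap anything.

```javascript
// Minimal sketch of "count pending requests, render when the count reaches zero".
// createRequestTracker/track/whenIdle are hypothetical names, not can-zone's API.
function createRequestTracker() {
  let pending = 0;
  let waiters = [];

  function checkIdle() {
    if (pending !== 0) return;
    const ready = waiters;
    waiters = [];
    ready.forEach((resolve) => resolve());
  }

  function settle() {
    pending -= 1;
    // Check on a later macrotask, so callbacks chained on the finished
    // request get a chance to start follow-up requests first.
    if (pending === 0) setTimeout(checkIdle, 0);
  }

  return {
    // Count a promise-returning task while it is in flight.
    track(task) {
      pending += 1;
      return Promise.resolve().then(task).finally(settle);
    },
    // Resolves once no tracked task is pending: the moment to render.
    whenIdle() {
      return new Promise((resolve) => {
        waiters.push(resolve);
        if (pending === 0) setTimeout(checkIdle, 0);
      });
    },
  };
}
```

On the server, rendering to HTML would start only after `whenIdle()` resolves, so requests started by other requests are still waited for.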

Server-side rendering is a feature of done-ssr

Incremental Rendering

DoneJS applications employ incremental rendering by default to get the best possible load performance. If SEO is important, use server-side rendering instead.

Incrementally rendered apps return initial HTML that includes the skeleton of your application and a tiny runtime script that connects back to the server to receive and apply incremental rendering instructions.

How it works

Incremental rendering uses most of the same mechanisms as server-side rendering in DoneJS: the single-context virtual DOM provided by done-ssr, and zones provided by can-zone.

What makes incremental rendering different is that it gets an initial page to the user as quickly as possible, while it continues to render. Any changes that take place on the server after HTML is sent get streamed as mutation instructions back to the browser where they are reapplied.
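To make "mutation instructions" concrete, here is a minimal sketch that assumes both the server's virtual DOM and the browser's DOM are represented as plain `{ tag, text, children }` objects. The instruction format (`setText`, `append`, child-index paths) and the helper names are hypothetical; done-ssr's actual wire format is not shown here. The server records each change it makes after the initial HTML is sent, and the client replays the same changes in order.

```javascript
// Hypothetical instruction format: { type, path, ... }, where `path` is a list
// of child indexes from the root. Not done-ssr's real wire format.
const clone = (node) => JSON.parse(JSON.stringify(node));

function nodeAt(root, path) {
  return path.reduce((node, index) => node.children[index], root);
}

// Server side: mutate the virtual tree and record an instruction for each change.
function createRecorder(root) {
  const instructions = [];
  return {
    instructions,
    setText(path, value) {
      nodeAt(root, path).text = value;
      instructions.push({ type: "setText", path, value });
    },
    append(path, node) {
      nodeAt(root, path).children.push(clone(node));
      instructions.push({ type: "append", path, node: clone(node) });
    },
  };
}

// Client side: replay the streamed instructions against the real tree.
function applyInstructions(root, instructions) {
  for (const instruction of instructions) {
    const target = nodeAt(root, instruction.path);
    if (instruction.type === "setText") target.text = instruction.value;
    else if (instruction.type === "append") target.children.push(clone(instruction.node));
    else throw new Error(`Unknown instruction type: ${instruction.type}`);
  }
}
```

Because the instructions are plain JSON, they can be streamed to the browser as soon as each one is produced.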

The runtime script receives these instructions by connecting back to the server at _donessr_instructions/uniqueId.

Progressive Loading

When you first load a single page app, you're typically downloading all the JavaScript and CSS for every part of the application. These kilobytes of extra weight slow down page load performance, especially on mobile devices.

DoneJS applications load only the JavaScript and CSS they need, when they need it, in highly optimized and cacheable bundles. That means your application will load fast.

There is no configuration needed to enable this feature, and wiring up progressively loaded sections of your app is simple.

How it works

Other build tools require you to manually configure bundles, which doesn't scale with large applications.

In a DoneJS application, any components (either modlets or .component files) contained within the ~/pages folder are progressively loaded. Then you run the build.

A build time algorithm analyzes the application's dependencies and groups them into bundles, optimizing for minimal download size.
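One way such grouping can work is sketched below, assuming the dependency graph is available as a plain object. `computeBundles` is a hypothetical name and this is not necessarily steal-tools' algorithm, which also has to balance bundle size against the number of requests. Each module is assigned to the bundle shared by exactly the set of pages that need it, so no page downloads code it doesn't use and shared code is downloaded (and cached) once.

```javascript
// Hypothetical sketch: group modules by the exact set of pages that need them.
// pages: { pageName: entryModule }, deps: { moduleName: [dependencyNames] }
function computeBundles(pages, deps) {
  const usedBy = new Map(); // module -> Set of page names that (transitively) import it

  for (const [page, entry] of Object.entries(pages)) {
    const stack = [entry];
    const seen = new Set();
    while (stack.length > 0) {
      const moduleName = stack.pop();
      if (seen.has(moduleName)) continue;
      seen.add(moduleName);
      if (!usedBy.has(moduleName)) usedBy.set(moduleName, new Set());
      usedBy.get(moduleName).add(page);
      stack.push(...(deps[moduleName] || []));
    }
  }

  // Modules needed by the same set of pages share a bundle, keyed e.g. "cart+home".
  const bundles = new Map();
  for (const [moduleName, pageSet] of usedBy) {
    const key = [...pageSet].sort().join("+");
    if (!bundles.has(key)) bundles.set(key, []);
    bundles.get(key).push(moduleName);
  }
  return bundles;
}
```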

That's it! No need for additional configuration in your JavaScript.

Progressive Loading is a feature of StealJS with additional support via the <can-import> tag of CanJS.

Caching and Minimal Data Requests

DoneJS improves performance by intelligently managing the data layer, taking advantage of various forms of caching and request reduction techniques.

Undoubtedly, the slowest part of any web application is round trips to the server. Especially now that more than 50% of web traffic comes from mobile devices, where connections are notoriously slow and unreliable, applications must be smart about reducing network requests.

Making matters worse, the concerns of maintainable architecture in single page applications are at odds with the concerns of minimizing network requests. This is because independent, isolated UI widgets, while easier to maintain, often make AJAX requests on page load. Without a layer that intelligently manages those requests, this architecture leads to too many AJAX requests before the user sees something useful.

With DoneJS, you don't have to choose between maintainability and performance.

DoneJS uses the following strategies to improve perceived performance (reducing the amount of time before users see rendered content): fall-through caching, combining requests, request caching, and an inline cache with server push.

How it works

can-connect makes up part of the DoneJS model layer. Because all requests flow through this data layer, it can make heavy use of set logic and localStorage caching to identify cache hits, even partial hits, and make the minimal set of requests possible.

It acts as a central hub for data requests, making decisions about how to best serve each request, but abstracting this complexity away from the application code. This leaves the UI components themselves able to make requests independently, and with little thought to performance, without actually creating a poorly performing application.

Fall through caching

Fall through caching serves cached data first, but still makes API requests to check for changes.

The major benefit of this technique is improved perceived performance. Users will see content faster. Most of the time, when there is a cache hit, that content will still be accurate, or at least mostly accurate.

This benefits two types of situations. First is page loads after the first page load (the first page load populates the cache). This scenario is less relevant when using server-side rendering. Second is long-lived applications that make API requests after the page has loaded. These types of applications will enjoy improved performance.

Fall-through caching is on by default, but can easily be turned off for data that should not be cached.

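Here's how the caching logic works: when the requested data is already in the cache, it is returned immediately so the UI can render it. The API request is still made, and when its response arrives, the cache is updated and any changes show up in the UI.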
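The flow can be sketched as a small function, assuming a `Map` stands in for localStorage and `fetchFresh` is any promise-returning API call. `fallThroughGet` is a hypothetical name, not can-connect's API, and comparing data with `JSON.stringify` is a simplification.

```javascript
// Hypothetical sketch of fall-through caching; a Map stands in for localStorage.
// onData may be called twice: once with cached data, once with fresh data.
function fallThroughGet(cache, key, fetchFresh, onData) {
  const hadCached = cache.has(key);
  const cached = cache.get(key);

  // 1. Serve cached data right away so the UI can render immediately.
  if (hadCached) onData(cached, { fromCache: true });

  // 2. Still ask the API, then update the cache and, if anything changed, the UI.
  return fetchFresh(key).then((fresh) => {
    cache.set(key, fresh);
    if (!hadCached || JSON.stringify(fresh) !== JSON.stringify(cached)) {
      onData(fresh, { fromCache: false });
    }
    return fresh;
  });
}
```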

Combining requests

Combining requests combines multiple incoming requests into one, if possible. This is done with the help of set algebra.

DoneJS collects requests that are made within a few milliseconds of each other, and if they are pointed at the same API, tries to combine them into a single superset request.

For example, the video below shows an application that displays two filtered lists of data on page load - a list of completed todos and a list of incomplete todos. Both are subsets of a larger set of data - the entire list of todos.

Combining these into a single request reduces the number of requests. This optimization is abstracted away from the application code that made the original request.
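Here is a minimal sketch of combining, assuming queries are single-property equality filters like `{ completed: true }` and that the API accepts a hypothetical `{ completed: { $in: [...] } }` filter for the combined superset request. `createBatcher`, `combineQueries`, and `matches` are hypothetical names; can-connect uses its own set algebra to decide what can be combined. Requests made within `windowMs` of each other are sent as one, and each caller gets back only the subset matching its own query.

```javascript
// Does `item` belong to the set described by `query`?
function matches(query, item) {
  return Object.entries(query).every(([key, value]) =>
    value !== null && typeof value === "object" && Array.isArray(value.$in)
      ? value.$in.includes(item[key])
      : item[key] === value
  );
}

// Combine single-property queries on the same property into one superset query,
// or return null if they can't be combined this simply.
function combineQueries(queries) {
  const keys = new Set(queries.flatMap((query) => Object.keys(query)));
  if (keys.size !== 1 || queries.some((query) => Object.keys(query).length !== 1)) return null;
  const [key] = keys;
  return { [key]: { $in: [...new Set(queries.map((query) => query[key]))] } };
}

// Collect getList calls made within `windowMs` of each other into one request.
function createBatcher(fetchList, windowMs = 5) {
  let queue = [];
  return function getList(query) {
    return new Promise((resolve, reject) => {
      queue.push({ query, resolve, reject });
      if (queue.length > 1) return; // a flush is already scheduled
      setTimeout(() => {
        const batch = queue;
        queue = [];
        const combined = batch.length > 1 ? combineQueries(batch.map((entry) => entry.query)) : null;
        if (!combined) {
          // Nothing to combine: send each request as-is.
          batch.forEach((entry) => fetchList(entry.query).then(entry.resolve, entry.reject));
          return;
        }
        // One superset request; each caller receives only its own subset.
        fetchList(combined).then(
          (items) => batch.forEach((entry) => entry.resolve(items.filter((item) => matches(entry.query, item)))),
          (error) => batch.forEach((entry) => entry.reject(error))
        );
      }, windowMs);
    });
  };
}
```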

Request caching

Request caching is a more aggressive type of caching than fall-through caching. It is meant for data that doesn't change very often. Its advantage is that it reduces both the number of requests that are made and the size of those requests.

There are two differences between request and fall-through caching:

Once data is in the cache, no more requests to the API for that same set of data are made. You can write code that invalidates the cache at certain times, or after a new build is released.

The request logic is more aggressive in its attempts to find subsets of the data within the cache, and to only make an API request for the subset NOT found in the cache. In other words, partial cache hits are supported.
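Partial cache hits come down to set difference. Here is a minimal sketch, assuming the cached "sets" are half-open index ranges `[start, end)` such as pages of a paginated list; `missingRanges` is a hypothetical helper, and can-connect handles more general sets than ranges. Only the returned ranges would need to be requested from the API.

```javascript
// Hypothetical helper: given cached ranges and a requested range (all
// half-open [start, end)), return the sub-ranges that still have to be fetched.
function missingRanges(cached, [start, end]) {
  const sorted = [...cached].sort((a, b) => a[0] - b[0]);
  const missing = [];
  let cursor = start; // everything before `cursor` is covered or already listed

  for (const [cachedStart, cachedEnd] of sorted) {
    if (cachedEnd <= cursor) continue; // cached range lies entirely before what we need
    if (cachedStart >= end) break; // cached range lies entirely after the request
    if (cachedStart > cursor) missing.push([cursor, cachedStart]); // gap before this range
    cursor = Math.max(cursor, cachedEnd);
    if (cursor >= end) break;
  }
  if (cursor < end) missing.push([cursor, end]);
  return missing;
}
```

The two scenarios described in the video map to the first two checks in this usage: a cached superset means nothing is fetched, and a cached subset means only the rest is fetched.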

The video below shows two example scenarios. The first shows the cache containing a superset of the request. The second shows the cache containing a subset of the request.

Inline cache and server push

Server-side rendered single page apps (SPAs) have a problem with wasteful duplicate requests. These can cause the browser to slow down, waste bandwidth, and reduce perceived performance.

DoneJS solves this problem with server push and an inline cache - embedded inline JSON data sent back with the server rendered content, which is used to serve the initial SPA data requests.

DoneJS uniquely makes populating and using the inline cache easy, even when data is requested with plain XHR.

The model layer seamlessly integrates the inline cache in client side requests, without any special configuration.
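Here is a minimal sketch of the two halves of an inline cache, assuming responses are keyed by URL. `renderInlineCache`, `createInlineCachedFetch`, and the `window.INLINE_CACHE` global are hypothetical names, not DoneJS' actual implementation: the server embeds the data its render fetched, and the client answers the matching first request from that data instead of repeating it.

```javascript
// Server side (hypothetical): embed the responses collected while rendering.
// Escaping "<" keeps data such as "</script>" from closing the tag early.
function renderInlineCache(responses) {
  const json = JSON.stringify(Object.fromEntries(responses)).replace(/</g, "\\u003c");
  return `<script>window.INLINE_CACHE = ${json};</script>`;
}

// Client side (hypothetical): answer the first matching request from the
// inline cache, then go to the network for any later request.
function createInlineCachedFetch(inlineCache, fetchFromNetwork) {
  return function getData(url) {
    if (Object.prototype.hasOwnProperty.call(inlineCache, url)) {
      const data = inlineCache[url];
      delete inlineCache[url]; // each entry is used once; later requests want fresh data
      return Promise.resolve(data);
    }
    return fetchFromNetwork(url);
  };
}
```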

While this flow would be possible in other SSR systems, it would require manually setting up all of these steps.

This video illustrates how it works.

Caching and minimal data requests is a feature of can-connect

Minimal DOM Updates

The rise of templates, data binding, and MV* separation, while boosting maintainability, has come at the cost of performance. Many frameworks are not careful or smart with DOM updates, leading to performance problems as apps scale in complexity and data size.

DoneJS' view engine touches the DOM more minimally and specifically than competitor frameworks, providing better performance in large apps and a "closer to the metal" feel.

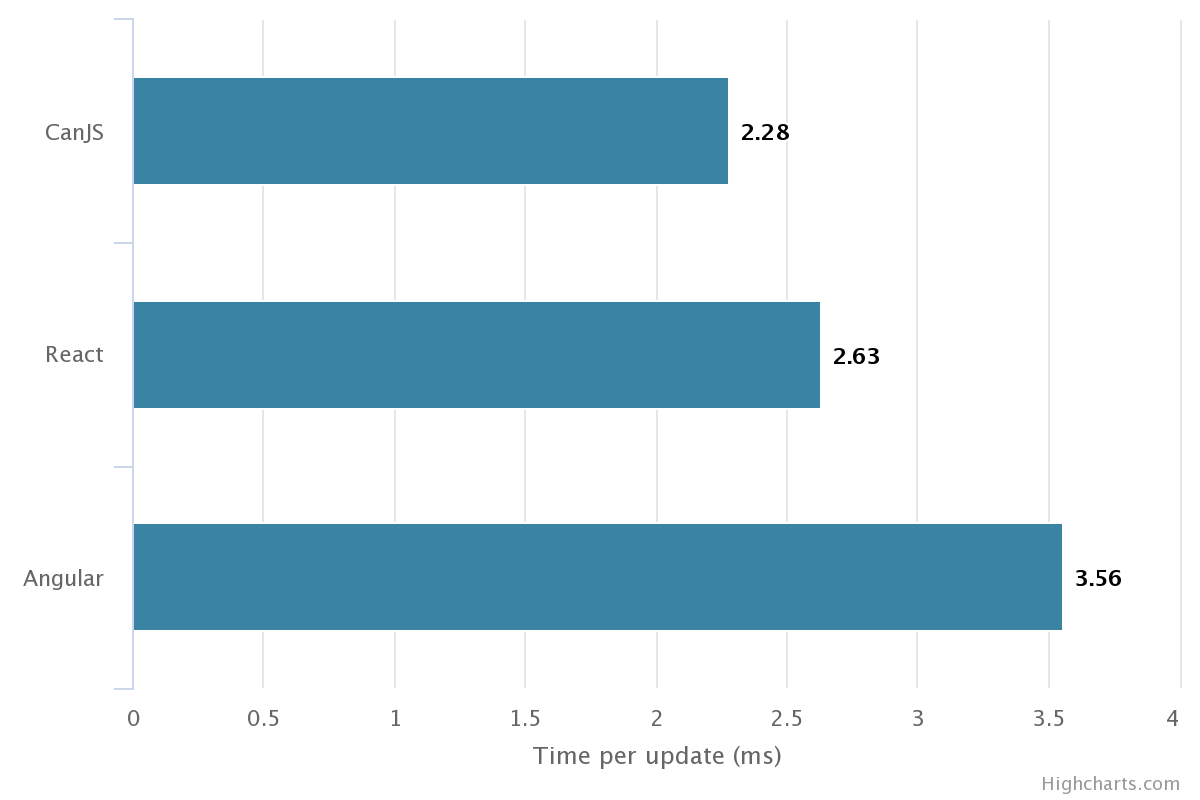

Take the TodoMVC application as an example. If you measure how long it takes DoneJS and React to render the same number of todos you'll see the performance advantage of minimal DOM updates. In fact, we did just that and here's the result:

You can run this test for yourself at JS Bin.

How it works

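Consider a template that renders a list of rows, and a change to a single property of one of those rows. In DoneJS, which uses the can-stache view engine, the observable row fires an event as soon as the property is set, and the data binding for that property updates just the one DOM node that displays it.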

In Backbone, you would need to manually re-render the template or roll your own rendering library.

In Angular, at the end of the current $digest cycle, that would result in an expensive comparison between the old rows array and the new one to see what properties have changed. After the changed property is discovered, the specific DOM node would be updated.

In React, that would result in the virtual DOM being re-rendered. A diff algorithm comparing the new and old virtual DOM would discover the changed node, and then the specific DOM node would be updated.

Of these four approaches, DoneJS knows about the change the quickest, and updates the DOM the most minimally.
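The DoneJS approach can be sketched with a tiny observable object and a text binding. This is not CanJS' implementation: `observable` and `bindText` are hypothetical helpers, and a plain object with a `textContent` property stands in for a DOM text node so the sketch runs outside a browser. Setting a property synchronously notifies only the bindings for that property, so there is nothing to diff.

```javascript
// Hypothetical observable: each property keeps its own listeners, and setting
// a property notifies them synchronously.
function observable(data) {
  const values = { ...data };
  const listeners = {};
  const obj = {
    on(key, listener) {
      (listeners[key] = listeners[key] || []).push(listener);
    },
  };
  for (const key of Object.keys(values)) {
    Object.defineProperty(obj, key, {
      enumerable: true,
      get: () => values[key],
      set(value) {
        if (value === values[key]) return;
        values[key] = value;
        (listeners[key] || []).forEach((listener) => listener(value));
      },
    });
  }
  return obj;
}

// Like {{name}} in a template: write the value once, then update only this
// node when that one property changes.
function bindText(node, source, key) {
  node.textContent = source[key];
  source.on(key, (value) => {
    node.textContent = value;
  });
}
```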

To see this in action, run the test embedded below, which compares how DoneJS, React, and Angular update the DOM when a single property changes:

You can run this test yourself at JS Bin.

With synchronously observable objects and data bindings that change minimal pieces of the DOM, DoneJS aims to provide templates that are both powerful and performant.

Minimal DOM updates is a feature of CanJS

Memory Safety

Preventing memory leaks is a critical feature of any client-side framework. The biggest source of memory leaks in client-side applications is event handlers. When adding an event handler to a DOM node you have to be sure to remove that handler when the node is removed. If you do not, that DOM node will never be garbage collected by the browser.

How it works

When event listeners are created in a DoneJS application, using either template event binding or Controls, CanJS stores these handlers internally.

CanJS also listens for the element's "removed" event. The "removed" event is a synthetic event that CanJS uses to tear down the stored handlers when the element is removed from the DOM, so the element can be garbage collected.

CanJS is different from other frameworks in that it cleans up its own memory even when the DOM is torn down without going through the framework, for example when an element is removed with a native DOM method.

In that case the event listeners are still torn down. This is because CanJS uses a MutationObserver to know about all changes to the DOM. When it sees that an element was removed, it triggers the "removed" event, cleaning up the memory.
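The bookkeeping can be sketched as a registry of teardown functions per element. `listen` and `onRemoved` are hypothetical helper names, not CanJS' internal API. In a browser, a MutationObserver feeds every removed node to `onRemoved`, whichever API removed it; outside a browser the observer wiring is skipped, and any `EventTarget` can stand in for an element.

```javascript
// Hypothetical helpers, not CanJS' internals: element -> list of cleanup functions.
const teardowns = new WeakMap();

// Add a listener and remember how to remove it.
function listen(element, type, handler) {
  element.addEventListener(type, handler);
  const cleanups = teardowns.get(element) || [];
  cleanups.push(() => element.removeEventListener(type, handler));
  teardowns.set(element, cleanups);
}

// Run the cleanups for a removed node and, since removing a parent detaches
// its whole subtree, for all of its descendants too.
function onRemoved(node) {
  const cleanups = teardowns.get(node);
  if (cleanups) {
    cleanups.forEach((cleanup) => cleanup());
    teardowns.delete(node);
  }
  for (const child of node.childNodes || []) onRemoved(child);
}

// In a browser, report every removal, whichever API (framework or native) caused it.
if (typeof MutationObserver !== "undefined" && typeof document !== "undefined") {
  new MutationObserver((records) => {
    for (const record of records) {
      record.removedNodes.forEach((node) => {
        if (!node.isConnected) onRemoved(node); // skip nodes that were only moved
      });
    }
  }).observe(document.documentElement, { childList: true, subtree: true });
}
```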

Deploy to a CDN

DoneJS makes it simple to deploy your static assets to a CDN (content delivery network).

CDNs are distributed networks of servers that serve static assets (CSS, JS, and image files). You only push your files to one service, and the CDN takes care of pushing and updating your assets on different servers across the country and globe. As your app scales, CDNs keep up with the demand and help support users regardless of whether they are in New York or Melbourne.

How it works

It's widely known that CDNs offer the best performance for static assets, but most apps don't use them, mainly because it's annoying: annoying to automate, configure, and integrate with the build process.

DoneJS comes with integrations with S3 and Firebase (popular CDN services) that make configuring and deploying to a CDN dirt simple.

Setting up Firebase takes two steps:

1. Run donejs add firebase in your terminal. It asks a few questions, and for most of them you can accept the default answer.
2. Run donejs deploy.

That's it. Now when you run your server in production mode, all static assets (CSS, JS, images, etc.) are served from the CDN.

Even better, you can set up continuous deployment, so that TravisCI or other tools will deploy your code, including pushing out your latest static files to the CDN, automatically.

Usability features

DoneJS is used to make beautiful, real-time user interfaces that can be exported to run on every platform.

iOS, Android, and Desktop Builds

Write your application once, then run it natively on every device and operating system. You can make iOS, Android, and desktop builds of your DoneJS application with no extra effort.

How it works

For iOS and Android builds, DoneJS integrates with Apache Cordova to generate a mobile app that is ready to be uploaded to Apple's App Store or Google Play.

For native desktop applications, DoneJS integrates with Electron or NW.js to create a native macOS, Windows, or Linux application.

Adding this integration is as simple as running donejs add cordova, donejs add electron, or donejs add nw.

With these simple integrations, you can expand your potential audience without having to build separate applications.

Cordova, Electron, and NW.js integration are features of the steal-electron, steal-cordova, and steal-nw projects.

Supports All Browsers, Even IE11

DoneJS applications support Internet Explorer 11 with minimal additional configuration. You can even write applications using most ES6 features, thanks to the built-in Babel integration.

Many people won't care about this because Internet Explorer is on its way out, which is a very good thing!

But it's not quite dead yet. For many mainstream websites, banks, and e-commerce applications, IE continues to hang around the browser stats.

And while other frameworks don't support Internet Explorer at all, DoneJS makes it easy to write one app that runs everywhere.

Real Time Connected

DoneJS is designed to add real-time behavior to applications using any backend technology stack.

Socket.io provides the basics to add real-time capabilities to any JavaScript application, but the challenge of integrating real-time updates into your code remains.

When new data arrives, how do you know what data structures to add it to? And where to re-render? Code must be written to send socket.io data across your application, but that code becomes aware of too much, and therefore is brittle and hard to maintain.

DoneJS makes weaving Socket.io backends into your UI simple and automatic.

How it works

DoneJS' model layer uses set logic to maintain lists of data represented by JSON properties, like a list of todos with {'ownerId': 2}. These lists are rendered to the UI via data-bound templates.

When server-side updates are sent to the client, items are automatically added to or removed from any lists they belong to. They also show up in the UI automatically because of the data bindings.
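The list bookkeeping can be sketched with plain objects, assuming each list in memory stores the query that describes it and only simple equality queries like `{ ownerId: 2 }` are supported. `belongsTo`, `applyRealtimeUpdate`, and `applyRealtimeDestroy` are hypothetical names; can-connect's real-time behavior supports richer queries, and data-bound templates would then re-render whichever lists changed.

```javascript
// A list's set is described by its query, e.g. { ownerId: 2 }.
function belongsTo(query, item) {
  return Object.keys(query).every((key) => item[key] === query[key]);
}

// A created or updated item arrived from the server: add it to lists it now
// belongs to, replace it where it stays, remove it where it no longer belongs.
function applyRealtimeUpdate(lists, item) {
  for (const list of lists) {
    const index = list.items.findIndex((existing) => existing.id === item.id);
    const shouldContain = belongsTo(list.query, item);
    if (shouldContain && index === -1) list.items.push(item);
    else if (shouldContain) list.items[index] = item;
    else if (index !== -1) list.items.splice(index, 1);
  }
}

// A destroyed item is removed from every list that holds it.
function applyRealtimeDestroy(lists, id) {
  for (const list of lists) {
    const index = list.items.findIndex((existing) => existing.id === id);
    if (index !== -1) list.items.splice(index, 1);
  }
}
```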

All of this happens with about 4 lines of code.

Follow the guide to see an example in action. View the can-connect real-time documentation here.

Real time connections is a feature of the can-connect project.

Pretty URLs with Pushstate

DoneJS applications use pushstate to provide navigable, bookmarkable pages that support the back and refresh buttons, while still keeping the user on a single page.

The use of pushstate allows your apps to have "Pretty URLs" like myapp.com/user/1234 instead of uglier hash-based URLs like myapp.com#page=user&userId=1234 or myapp.com/#!user/1234.

Wiring up these pretty URLs in your code is simple and intuitive.

How it works

Routing works a bit differently than other libraries. In other libraries, you might declare routes and map those to controller-like actions.

DoneJS application routes map URL patterns, like /user/1, to properties in our application state, like {'userId': 1}. In other words, our routes are just a representation of the application state.

This architecture simplifies routes so that they can be managed entirely in simple data-bound templates.
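Here is a minimal sketch of the two directions of that mapping, assuming `{name}` placeholders in URL patterns (similar to the placeholder syntax CanJS routes use). `urlToState` and `stateToUrl` are hypothetical helpers that only handle simple slash-separated patterns. In a DoneJS app the resulting state lives on the application view model, and routing keeps it and the URL in sync.

```javascript
// Hypothetical helpers; patterns use {name} placeholders between slashes.
function urlToState(pattern, url) {
  const patternParts = pattern.split("/");
  const urlParts = url.split("/");
  if (patternParts.length !== urlParts.length) return null;
  const state = {};
  for (let i = 0; i < patternParts.length; i++) {
    const placeholder = /^\{(\w+)\}$/.exec(patternParts[i]);
    if (placeholder) {
      if (urlParts[i] === "") return null; // placeholders need a value
      state[placeholder[1]] = decodeURIComponent(urlParts[i]);
    } else if (patternParts[i] !== urlParts[i]) {
      return null; // a literal segment doesn't match
    }
  }
  return state;
}

function stateToUrl(pattern, state) {
  return pattern.replace(/\{(\w+)\}/g, (_, key) => encodeURIComponent(state[key]));
}
```

Note that values parsed from a URL are strings, so `/user/1` yields `{ userId: "1" }`; a real app would convert it to a number.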

Pretty URLs and routing are features of the CanJS project.

Maintainability features

DoneJS helps developers get things done quickly with an eye toward maintenance.

Comprehensive Testing

Nothing increases the maintainability of an application more than good automated testing. DoneJS includes a comprehensive test layer that makes writing, running, and maintaining tests intuitive and easy.

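DoneJS provides tools for the entire testing lifecycle, from generating test scaffolding to writing unit and functional tests, mocking server APIs, and running tests from the command line.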

How it works

Testing JavaScript apps is a complex process unto itself. To do it right, you need many tools that work together seamlessly. DoneJS provides everything you need - the whole stack.

Generators

The DoneJS app generator command donejs add app creates a working project-level test HTML and JS file. Component generators, run via donejs add component cart, create a test script and an individual test page for each component.

Unit tests

Unit tests are used to test the interface for modules like models and view models. You can choose between BDD style unit tests with Jasmine, Mocha, or a more traditional TDD assertion style with QUnit.

Functional tests

Functional tests are used to test UI components by simulating user behavior. The syntax for writing functional tests is jQuery-like, chainable, and asynchronous, simulating user actions and waiting for page elements to change asynchronously.

Event simulation accuracy

User action methods, like click, type, and drag, simulate exactly the sequence of events generated by a browser when a user performs that action. For example, a simulated click is not just a click event. It triggers a mousedown, then blur, then focus, then mouseup, then click. The result is more accurate tests that catch bugs early.
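The idea can be sketched with standard `EventTarget` and `Event` objects, assuming the click target receives focus and another element loses it. `simulateClick` is a hypothetical helper, not FuncUnit's API; a browser-grade version would dispatch `MouseEvent` and `FocusEvent` objects with coordinates, bubbling, and browser-specific quirks.

```javascript
// Hypothetical helper: dispatch the sequence of events a real click produces.
function simulateClick(target, previouslyFocused) {
  const fire = (node, type) => node.dispatchEvent(new Event(type));
  fire(target, "mousedown");
  // Pressing the mouse moves focus: the old element blurs, the target focuses.
  if (previouslyFocused && previouslyFocused !== target) fire(previouslyFocused, "blur");
  fire(target, "focus");
  fire(target, "mouseup");
  fire(target, "click");
}
```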

Furthermore, there are differences between how IE and Safari handle a click. DoneJS tests take browser differences into account when running functional tests.

Running tests from the command line

DoneJS comes with a command line test runner, browser launcher, and reporting tool that integrates with any continuous integration environment.

No setup is required: running a DoneJS project's tests is as simple as running donejs test.

You can launch your unit and functional tests from the CLI, either in headless browser mode or in multiple real browsers. You can even launch BrowserStack virtual machines to test against any version of Android, Windows, etc.

The reporting tool gives detailed information about coverage statistics, and lets you choose from many different output formats, including XML or JSON files.

Mocking server APIs

Automated frontend testing is most useful when it has no external dependencies on API servers or specific sets of data. Thus a good mock layer is critical to writing resilient tests.

DoneJS apps use fixtures to emulate REST APIs. A default set of fixtures is created by generators when a new model is created. Fixtures are very flexible, and can be used to simulate error states and slow performing APIs.
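Here is a minimal sketch of a fixture-style mock for a todos API, assuming an in-memory array as the data store. `createFixtureApi` and its `delayMs` and `failWith` options are hypothetical, not can-fixture's API (which works by intercepting requests so application code doesn't change). The options show how a test could simulate a slow API or an error state.

```javascript
// Hypothetical fixture-style mock of a todos REST API backed by an array.
// delayMs simulates a slow API; failWith makes every request fail.
function createFixtureApi(records, { delayMs = 0, failWith = null } = {}) {
  const store = records.map((record) => ({ ...record }));
  let nextId = Math.max(0, ...store.map((record) => record.id)) + 1;

  // Every response is asynchronous, like a real network request.
  function respond(compute) {
    return new Promise((resolve, reject) => {
      setTimeout(() => {
        if (failWith) return reject(failWith);
        try {
          resolve(compute());
        } catch (error) {
          reject(error);
        }
      }, delayMs);
    });
  }

  return {
    // Like GET /todos?key=value
    getList(query = {}) {
      return respond(() =>
        store
          .filter((record) => Object.keys(query).every((key) => record[key] === query[key]))
          .map((record) => ({ ...record }))
      );
    },
    // Like POST /todos
    create(data) {
      return respond(() => {
        const record = { ...data, id: nextId++ };
        store.push(record);
        return { ...record };
      });
    },
    // Like DELETE /todos/{id}
    destroy(id) {
      return respond(() => {
        const index = store.findIndex((record) => record.id === id);
        if (index === -1) throw new Error(`No todo with id ${id}`);
        return store.splice(index, 1)[0];
      });
    },
  };
}
```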

Simple authoring

Several DoneJS features converge to make authoring tests extremely simple.

Because of ES6 module support, everything in a DoneJS app is a module, so a test can simply import the modules it needs, such as fixtures and the module under test.

This means the test is small, isolated, and simple. Tests themselves are modules too, so they can be collected easily into sets of tests.

Because of the modlet pattern, each component contains its own working test script and test file, which can be worked on in isolation.

Because of hot module swapping, you can write, debug, and run tests without constantly reloading your page.

Other frameworks require a build step before tests can be run. These builds concatenate dependencies and depend on the specific order of tests running, which is a brittle and inefficient workflow.

Because DoneJS uses a client side loader that makes it simple to start a new page that loads its own dependencies, there is no build script needed to compile and run tests.

You just run the generator, load your modules, write your test, and run it - from the browser or CLI.

More information

The DoneJS testing layer involves many pieces, and each has its own documentation if you want to learn more.

Documentation

Documentation is critical for maintainability of any complex application. When your team adds developers, docs ensure minimal ramp up time and knowledge transfer.

Yet most teams either don't write docs, or they'll do it "later" - a utopian future period that is always just out of reach. Why? Because it's extra work to set up a tool, configure it, create and maintain separate documentation files.

DoneJS comes with a documentation tool built in, and it generates multi-versioned documentation from inline code comments. It eliminates the barrier to producing documentation, since all you have to do is comment your code (which most people already do) and run donejs document.

How it works

You write comments above the module, method, or object that you want to document.
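For example, a comment like the one below documents a small module. The `@module`, `@parent`, `@param`, and `@return` tags are written as we understand DocumentJS' tag syntax (check its tag reference before relying on them), and `utils/add` is a hypothetical module name.

```javascript
/**
 * @module {function} utils/add
 * @parent utils
 *
 * Adds two numbers.
 *
 * @param {Number} first The first number.
 * @param {Number} second The second number.
 * @return {Number} The sum of first and second.
 */
function add(first, second) {
  return first + second;
}
```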

Then run donejs document. A browsable documentation website will be generated.

DoneJS applications use DocumentJS to produce multi-versioned documentation.

You can keep it simple like the example above, or you can customize your docs with many powerful features. In fact, this entire site and the CanJS site are generated using DocumentJS.

DoneJS Documentation is a feature of DocumentJS

Continuous Integration & Deployment

Continuous Integration (CI) and Continuous Deployment (CD) are must-have tools for any modern development team.

CI is a practice whereby all active development (i.e. a pull request) is checked against automated tests and builds, allowing problems to be detected early (before merging the code into the release branch).

CD means that any release or merges to your release branch will trigger tests, builds, and deployment.

Paired together, CI and CD enable automatic, frequent releases. CD isn't possible without CI. Good automated testing is a must to provide the confidence to release without introducing bugs.

DoneJS provides support for simple integration into popular CI and CD tools, like TravisCI and Jenkins.

How it works

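Setting up continuous integration and deployment involves several steps:

1. Writing tests
2. Setting up a test harness that runs tests from the command line
3. Creating simple scripts for running a build, test, and deploy
4. Integrating with a service that runs the scripts at the proper times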

Steps 1, 2, and 3 are the hard parts. Step 4 is simple. DoneJS helps with the hard parts in two main ways: proper test support and simple CLI commands.

Proper test support

DoneJS comes with comprehensive support for testing. The Testing section contains much more detail about testing support.

Generators create working test scripts right off the bat, and the plumbing for test automation is built into each project. Each modlet contains a skeleton for unit tests. All that is left for the developer to do is write tests.

Simple CLI commands

Another hurdle is creating automated build, test, and deployment scripts. Every DoneJS app comes with a build, test, and deployment one-liner:

donejs build, donejs test, and donejs deploy.

Tool integration

Once the tests are written and the scripts are automated, integrating with the tools that automatically run these scripts is quite simple. For instance, setting up Travis CI involves signing up and adding a .travis.yml file to the project.

Modlets

The secret to building large apps is to never build large apps. Break up your application into small pieces. Then, assemble.

DoneJS encourages the use of the modlet file organization pattern. Modlets are small, decoupled, reusable, testable mini applications.

How it works

Large apps have a lot of files. There are two ways to organize them: by type or by module.

Organization by module, or modlets, makes large applications easier to maintain by encouraging good architecture patterns.

An example modlet from the in-depth guide is the order/new component. It has its own demo page and test page.

DoneJS generators create modlets to get you started quickly. To learn more about the modlet pattern, read this blog post.

Modlets are a feature of DoneJS generators.

npm Packages

DoneJS makes it easy to share and consume modules via package managers like npm and Bower.

You can import modules from any package manager in any format - CommonJS, AMD, or ES6 - without any configuration. And you can convert modules to any other format.

The goal of these features is to transform project workflows, making it easier to share and reuse ideas and modules of functionality across applications, with less hassle.

How it works

DoneJS apps use StealJS to load modules and install packages. This video introduces npm import and export in StealJS:

Zero config package installation

Unlike Browserify or Webpack, StealJS is a client-side loader, so you don't have to run a build to load pages.

Installing a package in a DoneJS app via npm or Bower involves no configuration. Install your package from the command line:

Then immediately consume that package (and its dependencies) in your app:

Using require.js or other client-side loaders, you'd have to add pathing and other information to your configuration file before being able to use your package. In DoneJS, this step is bypassed because of scripts that add config to your package.json file as the package is installed.
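Here is a minimal sketch of the idea behind "no configuration", under simplifying assumptions. A loader can work out where a bare package name lives by reading that package's own package.json, so you never write a paths entry by hand. The installed table and the resolve function are hypothetical and are not StealJS's resolution code. The index.js fallback mirrors Node's default when "main" is absent. The sketch ignores scoped (@org/pkg) names and nested node_modules folders.

```javascript
// Hypothetical stand-in for the package.json files of installed packages.
// A real loader would read node_modules/<name>/package.json instead.
const installed = {
  lodash: { name: "lodash", main: "lodash.js" },
  "my-widget": { name: "my-widget" } // no "main" field
};

// Turn a bare specifier ("lodash", "lodash/fp") into a file path using only
// package metadata, so no hand-written paths configuration is needed.
// Simplified: no scoped (@org/pkg) names, no nested node_modules lookups.
function resolve(specifier) {
  const [pkgName, ...rest] = specifier.split("/");
  const pkg = installed[pkgName];
  if (!pkg) {
    throw new Error(`Package not installed: ${pkgName}`);
  }
  const base = `node_modules/${pkgName}/`;
  if (rest.length > 0) {
    // Deep import: "lodash/fp" -> "node_modules/lodash/fp.js"
    return `${base}${rest.join("/")}.js`;
  }
  // Entry point: the "main" field, or index.js when it is missing.
  return base + (pkg.main || "index.js");
}

console.log(resolve("lodash"));    // node_modules/lodash/lodash.js
console.log(resolve("my-widget")); // node_modules/my-widget/index.js
console.log(resolve("lodash/fp")); // node_modules/lodash/fp.js
```

The design choice being illustrated: the package's own metadata is the single source of truth. Installing a package therefore tells the loader everything it needs.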

You can import that package in any format: CommonJS, AMD, or ES6 module format.

Convert to any format

DoneJS supports converting a module to any other format: CommonJS, AMD, or ES6 module format, or script and link tags.

The advantage is that you can publish your module to a wider audience of users. Anyone writing JavaScript can use your module, regardless of which script loader they are using (or if they aren't using a script loader).
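One widely used way to reach users of every loader from a single file is the UMD (Universal Module Definition) pattern. It is a swapped-in illustration of the goal, not the output of the DoneJS export script. The sketch assumes hypothetical names: registerModule, greeterFactory, and an env object. A classic UMD wrapper inspects the real globals. Here the host environment is passed in explicitly, so the AMD, CommonJS, and plain-script branches can all run in one script.

```javascript
// The module's real code, written once as a factory function.
function greeterFactory() {
  return { greet: (who) => `Hello, ${who}!` };
}

// UMD-style registration. A classic UMD wrapper inspects the real globals
// (define, module, window); here the host environment is passed in as `env`
// (hypothetical) so all three branches can run in one script.
function registerModule(name, factory, env) {
  if (typeof env.define === "function" && env.define.amd) {
    // AMD loaders such as RequireJS call the factory themselves.
    env.define([], factory);
    return "amd";
  }
  if (env.module && typeof env.module.exports === "object") {
    // CommonJS (Node, Browserify, webpack): export via module.exports.
    env.module.exports = factory();
    return "cjs";
  }
  // No loader: attach to the global object, as a plain <script> tag would.
  env.root[name] = factory();
  return "global";
}

// CommonJS consumer
const cjsModule = { exports: {} };
registerModule("greeter", greeterFactory, { module: cjsModule });
console.log(cjsModule.exports.greet("CommonJS")); // Hello, CommonJS!

// AMD consumer: a toy define() that runs the factory immediately
const amdRegistry = [];
const define = (deps, factory) => amdRegistry.push(factory());
define.amd = {};
registerModule("greeter", greeterFactory, { define });
console.log(amdRegistry[0].greet("AMD")); // Hello, AMD!

// Script-tag consumer: a fake window object
const fakeWindow = {};
registerModule("greeter", greeterFactory, { root: fakeWindow });
console.log(fakeWindow.greeter.greet("a script tag")); // Hello, a script tag!
```

Producing one output per format, as an export step does, keeps each file free of this detection logic. UMD trades that for a single file that works everywhere.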

Just create an export script that points to the output formats you want, along with some options:

and run it from your command line:

Modular workflow

In combination with other DoneJS features, npm module import and export make it possible for teams to design and share components easily.

Generators make it easy to bootstrap new modules of functionality quickly, and the modlet pattern makes it easy to organize small, self-contained modules. It's even easy to create tests and documentation for each module.

DoneJS enables a modular workflow, where pieces of small, reusable functionality can be easily created, shared, and consumed.

Similar to the way that the microservices architecture encourages reuse of APIs across applications, the modular workflow encourages reuse of self-contained modules of JavaScript across applications.

Imagine an organization where every app is broken into many reusable pieces, each of which is independently tested, developed, and shared. Over time, developers would be able to quickly spin up new applications, reusing previous functionality. DoneJS makes this a real possibility.


npm package support is a feature of StealJS.

ES6 Modules

DoneJS supports the compact and powerful ES6 module syntax, even for browsers that don't support it yet. Besides future-proofing your application, writing ES6 modules makes it easier to write modular, maintainable code.

How it works

DoneJS applications are actually able to import or export any module type: ES6, AMD and CommonJS. This means you can slowly phase in ES6, while still using your old code. You can also use any of the many exciting ES6 language features.

A compiler is used to convert ES6 syntax to ES5 in browsers that don't yet support ES6. During development, the compiler runs in the browser, so changes show up live without a build step. During the build, your code is compiled to ES5, so your production code will run natively in every browser. You can even run your ES6 application in IE11+!
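To give a feel for what "convert ES6 syntax to ES5" means, here is a small ES6 function next to a hand-written ES5 equivalent. The ES5 version is roughly the shape a compiler such as Babel produces, though real compiler output differs in detail. Module syntax (import/export) is compiled too, but it is left out here because running it needs a module loader. The formatName names are hypothetical.

```javascript
// ES2015+ source: arrow function, destructuring with a default value,
// and a template literal.
const formatName = ({ first, last = "" }) => `${first} ${last}`.trim();

// Hand-written ES5 equivalent, roughly the shape a compiler such as Babel
// produces (actual compiler output differs in detail).
var formatNameES5 = function (ref) {
  var first = ref.first;
  var last = ref.last === undefined ? "" : ref.last;
  return (first + " " + last).trim();
};

console.log(formatName({ first: "Ada", last: "Lovelace" }));    // Ada Lovelace
console.log(formatNameES5({ first: "Ada", last: "Lovelace" })); // Ada Lovelace
console.log(formatNameES5({ first: "Ada" }));                    // Ada
```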


ES6 module support is a feature of the stealjs/transpile project.

Custom HTML Elements

One of the most important concepts in DoneJS is splitting up your application functionality into independent, isolated, reusable custom HTML elements.

The major advantages of building applications based on custom HTML elements are:

Just like HTML's natural advantages, composing entire applications from HTML building blocks allows for powerful and easy expression of dynamic behavior.

How it works

First, it's important to understand the background of custom elements and their advantages. Then, we'll discuss the details of creating powerful custom elements specifically in DoneJS, and why they're special.

Benefits of custom elements

Before custom HTML elements existed, to add a datepicker to your page, you would:

With custom HTML elements, to add the same datepicker, you would:

That might seem like a subtle difference, but it is actually a major step forward. The custom HTML element syntax allows for instantiation, configuration, and location, all happening at the same time.

Custom HTML elements are part of Web Components, a set of browser specs that have been implemented across browsers.

Benefits of DoneJS custom elements

DoneJS uses CanJS' can-component to provide a modern take on web components.

Components in DoneJS have three basic building blocks:

There are several unique benefits to DoneJS custom elements:

Custom elements can be defined in a single .component file, or as a modlet.

Defining a custom element

One way to define a component is a DoneJS component-style declaration: a single file with a .component extension.

This simple form of custom element is great for quick, small widgets, since everything is contained in one place.

Another way to organize a custom element is a modlet style file structure: a folder with the element broken into several independent pieces. In this pattern, the custom element's ViewModel, styles, template, event handlers, demo page, tests, and test page are all located in separate files. This type of custom element is well suited for export and reuse.

DoneJS Generators will create both of these types of custom elements so you can get started quickly.

Data elements + visual elements = expressive templates

The beauty and power of custom HTML elements are most apparent when visual widgets (like graphs) are combined with elements that express data.

Custom element libraries

Custom elements are designed to be easily shareable across your organization. DoneJS provides support for simple npm import and export and creating documentation for elements. Together with custom element support, these features make it easier than ever to create reusable bits of functionality and share them.

Some open source examples of DoneJS custom elements:

bit-c3 bit-tabs bit-autocomplete

Check out their source for good examples of shareable, documented, and tested custom elements.

In-template dependency declarations

can-import is a powerful feature that allows templates to be entirely self-sufficient. You can load custom elements, helpers, and other modules straight from a template file like:


Custom HTML elements are a feature of CanJS.

MVVM Architecture

DoneJS applications employ a Model-View-ViewModel architecture pattern, provided by CanJS.

The introduction of a strong ViewModel has some key advantages for maintaining large applications:

How it works

The following video introduces MVVM in DoneJS, focusing on the strength of the ViewModel with an example.

DoneJS has a uniquely strong ViewModel layer compared to other frameworks. We'll discuss how it works and compare it to other frameworks.

MVVM overview

Models in DoneJS are responsible for loading data from the server. They can be reused across ViewModels. They often perform data validation and sanitization logic. Their main function is to represent data sent back from a server. Models use intelligent set logic that enables real-time integration and caching techniques.
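A minimal sketch of the caching side of set logic follows, under strong simplifying assumptions. Queries are plain page ranges, the fetch is synchronous, and nothing filters or sorts, whereas real model requests are asynchronous and queries can do both. The core rule is that if a new query is a subset of data already loaded, it can be answered from memory. RangeCache, fetchRange, and getList are hypothetical names, not the CanJS model API.

```javascript
// Simplified "set logic" for a paginated list. A query is a range
// { start, end } with `end` exclusive. If a new query is a subset of a range
// that was already loaded, it can be answered from memory without a request.
class RangeCache {
  constructor(fetchRange) {
    this.fetchRange = fetchRange; // hypothetical (synchronous) server call
    this.loaded = [];             // previously loaded { start, end, items }
    this.requests = 0;            // how many times the "server" was hit
  }

  static isSubset(query, set) {
    return query.start >= set.start && query.end <= set.end;
  }

  getList(query) {
    const hit = this.loaded.find((set) => RangeCache.isSubset(query, set));
    if (hit) {
      // Cut the requested slice out of the cached superset.
      return hit.items.slice(query.start - hit.start, query.end - hit.start);
    }
    this.requests += 1;
    const items = this.fetchRange(query.start, query.end);
    this.loaded.push({ start: query.start, end: query.end, items });
    return items;
  }
}

const todos = Array.from({ length: 100 }, (_, i) => `todo ${i}`);
const cache = new RangeCache((start, end) => todos.slice(start, end));

cache.getList({ start: 0, end: 50 });               // not cached: 1st request
const page = cache.getList({ start: 10, end: 20 }); // subset: served from cache
console.log(page[0], page.length, cache.requests);  // todo 10 10 1
cache.getList({ start: 40, end: 60 });              // not a subset: 2nd request
console.log(cache.requests);                        // 2
```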

Views in DoneJS are templates: specifically, templates that use Handlebars syntax, but with data bindings and rewritten for better performance. Handlebars templates are designed to be logic-less.

ViewModels will be covered in detail below.

Independent ViewModels

The first reason DoneJS ViewModels are unique is their independence. ViewModels and Views are completely decoupled, and a ViewModel can be developed in complete isolation from its template.

For example, here's a typical ViewModel, which is often defined in its own separate file like viewmodel.js and exported as its own module.

The template (view) lives in its own file, so a designer could easily modify it without touching any JavaScript. This template renders the ViewModel property from above.

A custom HTML element, also known as a component, would be used to tie these layers together:

The ViewModel is defined as its own module and exported as an ES6 module, so it can be imported into a unit test, instantiated, and tested in isolation from the DOM:
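Testing a ViewModel in isolation needs no browser at all. In DoneJS the ViewModel would typically be built with CanJS observables and live in its own module. This sketch uses a plain class instead so it runs anywhere. PersonViewModel, expectEqual, and testPersonViewModel are hypothetical names. The test only instantiates the ViewModel and checks its properties, with no template or DOM involved.

```javascript
// Hypothetical ViewModel written as a plain class. It holds state and a
// derived value but has no reference to a template or to the DOM.
class PersonViewModel {
  constructor({ first = "", last = "" } = {}) {
    this.first = first;
    this.last = last;
  }

  // Derived property, recalculated from first and last on each read.
  get fullName() {
    return `${this.first} ${this.last}`.trim();
  }
}

// Tiny assertion helper so the example needs no test framework.
function expectEqual(actual, expected, label) {
  if (actual !== expected) {
    throw new Error(`${label}: expected "${expected}", got "${actual}"`);
  }
}

// A unit test: instantiate the ViewModel and check it directly,
// with no browser, template, or rendering involved.
function testPersonViewModel() {
  const vm = new PersonViewModel({ first: "Ada", last: "Lovelace" });
  expectEqual(vm.fullName, "Ada Lovelace", "initial fullName");

  vm.first = "Grace";
  expectEqual(vm.fullName, "Grace Lovelace", "after changing first");

  expectEqual(new PersonViewModel().fullName, "", "empty defaults");
  return "PersonViewModel: all checks passed";
}

console.log(testPersonViewModel()); // PersonViewModel: all checks passed
```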

In other frameworks, ViewModels don't enjoy this level of independence. Every React class has a render function, which is essentially a template, so the View, ViewModel, and component definition are typically part of the same module. Every Angular directive is a ViewModel. In DoneJS, separating the ViewModel, template, and custom element is encouraged, making each module more decoupled and easier to unit test.

Powerful observable data layer

A powerful observable data layer binds the layers together with very little code.

DoneJS supports the following features:

Direct observable objects - changes to a property in an object or array immediately and synchronously notify any event listeners.

Computed properties - ViewModels can define properties that depend on other properties, and they'll automatically recompute only when their dependent properties change.

Data bound templates - templates bind to property changes and update the DOM as needed.

In the simple ViewModel example above, fullName's value depends on first and last. If something in the application changes first, fullName will recompute. fullName is data bound to the view that renders it.

If first is changed, fullName recomputes, then the DOM automatically changes to reflect the new value.

The interplay of these layers provides amazing power to developers. ViewModels express complex relationships between data, without regard to its display. Views express properties from the ViewModel, without regard to how the properties are computed. The app then comes alive with rich functionality.
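Here is a plain-JavaScript sketch of the three pieces working together: a direct observable, a cached computed property, and a binding that updates a view. Observable, computed, and the textNode object are hypothetical stand-ins, not CanJS APIs. CanJS detects a computed property's dependencies automatically by observing which properties it reads. This sketch lists them by hand to stay short.

```javascript
// Direct observable: setting a property synchronously notifies listeners.
class Observable {
  constructor(initial) {
    this._values = { ...initial };
    this._listeners = {};
    for (const key of Object.keys(initial)) {
      Object.defineProperty(this, key, {
        enumerable: true,
        get: () => this._values[key],
        set: (value) => {
          if (this._values[key] === value) return; // no change, no event
          this._values[key] = value;
          (this._listeners[key] || []).forEach((fn) => fn(value));
        }
      });
    }
  }

  on(key, fn) {
    (this._listeners[key] = this._listeners[key] || []).push(fn);
  }
}

// Computed property: caches its value and recomputes only after one of its
// declared dependencies changes (dependencies are listed by hand here).
function computed(source, deps, fn) {
  let cached;
  let dirty = true;
  let evaluations = 0;
  const listeners = [];

  function read() {
    if (dirty) {
      cached = fn(source);
      dirty = false;
      evaluations += 1;
    }
    return cached;
  }

  for (const dep of deps) {
    source.on(dep, () => {
      dirty = true;
      const value = read();
      listeners.forEach((listener) => listener(value));
    });
  }

  return {
    read,
    on: (listener) => listeners.push(listener),
    get evaluations() {
      return evaluations;
    }
  };
}

// Wiring: a ViewModel, a computed fullName, and a fake text node
// standing in for {{fullName}} in a template.
const person = new Observable({ first: "Ada", last: "Lovelace" });
const fullName = computed(person, ["first", "last"], (p) => `${p.first} ${p.last}`);
const textNode = { textContent: fullName.read() };
fullName.on((value) => {
  textNode.textContent = value; // the "data binding": update the view
});

person.first = "Grace";
console.log(textNode.textContent); // Grace Lovelace
fullName.read();                   // cached, no recomputation
person.first = "Grace";            // same value, no event
console.log(fullName.evaluations); // 2
```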

Without automatic ties connecting these layers, achieving the same fullName functionality would require more code explicitly performing these steps. There would need to be communication between layers, removing the isolation achieved above. Any change to first would need to notify the ViewModel's fullName of a change. Any change to fullName would need to tell the view to re-render itself. These dependencies grow and quickly lead to unmaintainable code.

In AngularJS (Angular 1.x), there are no direct observables. It uses dirty checking with regular JavaScript objects, which means that at the end of each $digest cycle, it runs an algorithm that determines what data has changed. This has performance drawbacks, as well as making it harder to write simple unit tests.

In React, there is no observable data layer. You could define a fullName like we showed above, but it would be recomputed every time render is called, whether or not it has changed. Though it's possible to isolate and unit test a ViewModel in React, it's not quite set up to make this easy.

More information

To learn more:

The MVVM architecture in DoneJS is provided by CanJS.

Hot Module Swapping

Getting and staying in flow is critical while writing complex apps. In DoneJS, whenever you change JavaScript, CSS, or a template file, the change is automatically reflected in your browser, without a browser refresh.

How it works

Live reload servers generally watch for file changes and force your browser window to refresh. DoneJS doesn't refresh the page; it re-imports modules that are marked as dirty, in real time.
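Conceptually, hot swapping replaces a changed module, and every module that imports it, inside the running app. Everything else is left untouched. Here is a minimal in-memory sketch of that bookkeeping. defineModule, load, and hotSwap are hypothetical helpers, not StealJS's API. Real tooling also has to watch files, fetch the new source, and apply it to the live page.

```javascript
// Hypothetical module registry: name -> { deps, factory, exports, loaded }.
const registry = new Map();
const evaluationLog = []; // records each time a module body runs

function defineModule(name, deps, factory) {
  registry.set(name, { deps, factory, exports: undefined, loaded: false });
}

// Import a module, evaluating it (and its dependencies) only if needed.
function load(name) {
  const mod = registry.get(name);
  if (!mod.loaded) {
    mod.exports = mod.factory(...mod.deps.map(load));
    mod.loaded = true;
    evaluationLog.push(name);
  }
  return mod.exports;
}

// Hot swap: replace one module's code, mark it and every module that
// (transitively) imports it as dirty, and re-import only those.
// Modules outside the dirty set keep their existing exports and state.
function hotSwap(changedName, newFactory) {
  registry.get(changedName).factory = newFactory;
  const dirty = new Set([changedName]);
  let grew = true;
  while (grew) {
    grew = false;
    for (const [name, mod] of registry) {
      if (!dirty.has(name) && mod.deps.some((dep) => dirty.has(dep))) {
        dirty.add(name);
        grew = true;
      }
    }
  }
  for (const name of dirty) registry.get(name).loaded = false;
  for (const name of dirty) load(name);
  return [...dirty];
}

defineModule("config", [], () => ({ greeting: "Hello" }));
defineModule("format", [], () => (text) => text.toUpperCase());
defineModule("app", ["config", "format"], (config, format) => format(config.greeting));

console.log(load("app"));             // HELLO
evaluationLog.length = 0;             // forget the initial load

hotSwap("config", () => ({ greeting: "Hi" }));
console.log(load("app"));             // HI
console.log(evaluationLog.join(", ")); // config, app  ("format" was not re-run)
```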

The correct terminology is actually hot swapping, not live reload. Regardless of what it's called, the result is a blazing fast development experience.

There is no configuration needed to enable this feature. Just start the dev server and begin:


Hot module swapping is a feature of StealJS.

Generators

DoneJS generators help you kickstart new projects and components. They'll save you time, eliminating boilerplate by scaffolding a working project, component, or module.

Generator templates set up many of the best practices and features discussed in the rest of this page, without you even realizing it.

How it works

The DoneJS generator uses Yeoman to bootstrap your application, component, or model.

There are four generators by default (and you can easily create your own).

Project generator

From the command line, run:

You'll be prompted for a project name, source folder, and other setup information. DoneJS' project dependencies will be installed, like StealJS and CanJS. In the folder that was created, you'll see:

You're now a command away from running application-wide tests, generating documentation, and running a build. Start your server with donejs develop, open your browser, and you'll see a functioning, server-side rendered hello world page.

Modlet component generator

To create a component organized with the modlet file organization pattern:

It will create the following files:

This folder contains everything a properly maintained component needs: a working demo page, a basic test, and documentation placeholder markdown file.

Simple component generator

For simple, standalone components:

This will generate a working component in a single file.

Model generator

To create a new model:

This will create the new model in the models folder.

Generators are provided by the Generator DoneJS project with additional support via the donejs-cli project.